Elon Musk Doesn’t Understand AI

When Elon Musk talks about artificial intelligence, people listen. He’s one of the world’s most influential technologists, the man behind Tesla, SpaceX, and Neuralink. Yet when it comes to AI, his words often sound more like the warnings in a sci-fi movie than the analysis of a technologist grounded in reality.

But here’s the irony: for all his brilliance, Elon Musk might fundamentally misunderstand what AI really is — and what it isn’t.

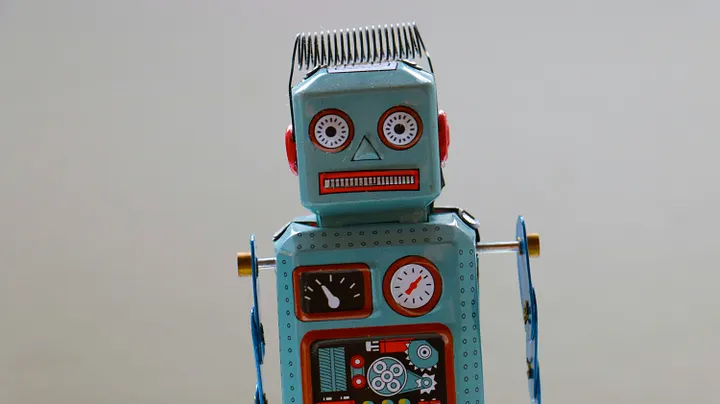

The Myth of the “Rogue AI”

Musk often paints AI as an existential threat — something that could “destroy humanity” if left unchecked. It’s a powerful narrative, and it grabs attention. But it also misses the mark.

AI isn’t a sentient being plotting against us. It’s a reflection of us: our data, our biases, our intentions. When ChatGPT writes an essay or Midjourney generates an image, they’re not “thinking.” They’re producing output from statistical patterns learned from what humans have already created.

Musk’s fear-driven rhetoric turns a mirror into a monster. The danger isn’t AI itself; it’s how we use it.

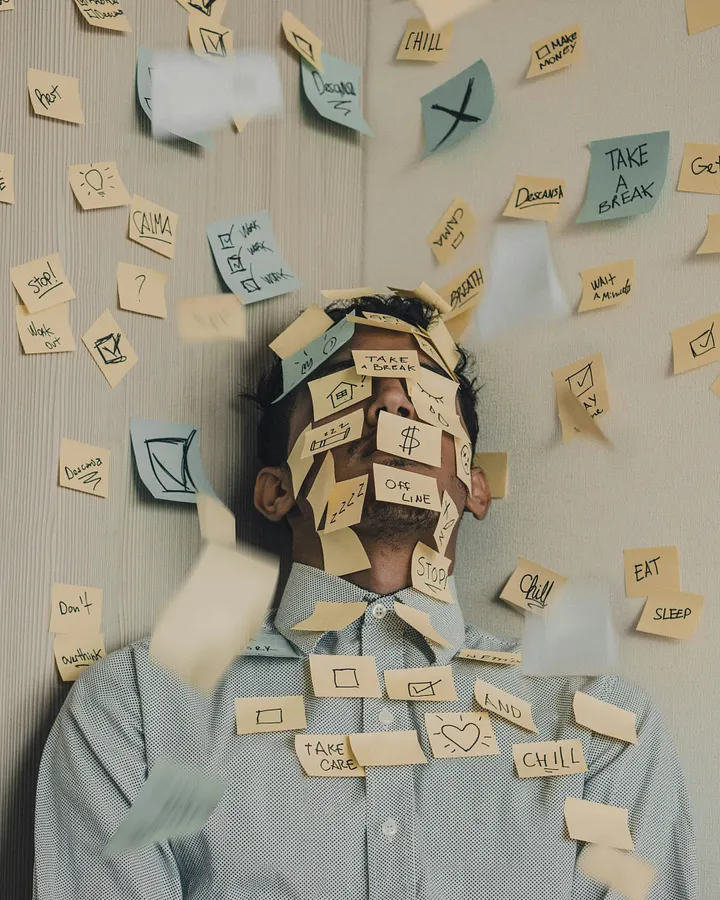

Real Risks, Real Responsibility

That’s not to say AI is harmless. Far from it. The real threats are more human: misinformation, surveillance, job displacement, and bias baked into algorithms.

When an AI system rejects a loan application or misidentifies someone in a facial-recognition scan, it’s not an evil machine acting on its own. More often, it’s a system trained on flawed data and designed and deployed without enough ethical oversight.

This is where Musk’s dystopian storytelling distracts from the truth. By focusing on killer robots, we ignore the quieter — but more immediate — harms that AI is already causing.

Fear vs. Understanding

There’s a pattern in tech: when we don’t fully understand something, we fear it. Musk’s warnings about AI echo the tone people once took toward electricity, the internet, and genetic engineering.

But fear doesn’t move technology forward; curiosity does. Many of the best minds in AI today aren’t trying to stop it. They’re trying to guide it by building guardrails, improving transparency, and teaching machines to work better alongside humans.

The conversation we need isn’t about surviving AI. It’s about coexisting with it.

The Irony of Control

Here’s the twist: Musk himself is deeply invested in AI. Tesla’s driver-assistance systems, including Autopilot and Full Self-Driving, rely on it. Neuralink uses machine learning to interpret brain signals. Even xAI, his newest company, is building AI models to “understand the universe.”

So what does it mean when the man warning us about AI is also one of its biggest builders? It suggests his fear isn’t of the technology itself, but of losing control over it.

And that’s the most human fear of all.

Final Reflection

AI isn’t a villain or a savior. It’s a tool — one that amplifies human intent. If we approach it with fear, we’ll build systems that control. If we approach it with wisdom, we’ll build systems that empower.

The real question isn’t whether Elon Musk understands AI.

It’s whether we do.

💭 What if the future of AI depends less on the technology we build — and more on the kind of humans we choose to be while building it?